Marketing is a combination of art and science. The best marketing campaigns involve plenty of creativity, but they also rely on data and testing to ensure their success. With A/B testing, marketers can take the guesswork out of their email marketing campaigns by testing specific elements with small groups of recipients (Group A and Group B) and then sending the campaign with the best-performing elements to the rest of their audience.

Every single element that makes up an email can be tested, but the most commonly tested elements are:

- Call-to-action

- Content

- Subject line

- Personalisation

- Time of day and week

Before we get started, keep in mind that any good scientific test needs a hypothesis and a control group so the results can be measured accurately. For example, you may assume that your audience would prefer to click on a green button rather than a red one. That is your hypothesis. Once you have your hypothesis, organise your database into the following groups:

- 10% control group

- 10% group A

- 10% group B

- 70% remaining audience

The control group will receive your email as usual. Group A will receive one version of the element you are testing, Group B will receive the other, and whichever version performs better can then be sent to the remaining audience.
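If you are splitting a list yourself rather than letting your email platform do it, the key is to assign recipients at random so that each group is comparable. The Python sketch below is a minimal illustration, assuming your subscribers are a simple list of email addresses; the function name `split_audience` is hypothetical and is not part of Vision6 or any other platform.

```python
import random


def split_audience(recipients, seed=None):
    """Randomly assign recipients to control, A, B and remaining groups (10/10/10/70).

    Hypothetical helper for illustration; most email platforms handle this for you.
    """
    shuffled = list(recipients)
    # Shuffle so that no group is biased by list order (e.g. sign-up date).
    random.Random(seed).shuffle(shuffled)
    tenth = len(shuffled) // 10  # size of each 10% test group, rounded down
    return {
        "control": shuffled[:tenth],
        "group_a": shuffled[tenth:2 * tenth],
        "group_b": shuffled[2 * tenth:3 * tenth],
        # Everyone else, including any rounding leftovers, waits for the winner.
        "remaining": shuffled[3 * tenth:],
    }


if __name__ == "__main__":
    emails = [f"subscriber{i}@example.com" for i in range(1000)]
    groups = split_audience(emails, seed=42)
    for name, members in groups.items():
        print(name, len(members))
```

Rounding leftovers go to the remaining audience, and passing a fixed `seed` makes the split reproducible if you need to rebuild the same groups later.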

One of my favourite blogs on A/B testing is Behave.org (formerly Which Test Won), where you can view real-world A/B tests and guess which version won. It’s a great way to challenge your assumptions about what recipients respond well to and to start thinking about the tests you could run for your own email marketing campaigns. So let’s get started.

Call-to-action

What are you offering your readers to entice them to your website? A blog post? Webinar? Competition? Product promotion? We’ll discuss content formats later in this blog, so for now let’s talk buttons. How are you labelling your buttons to tell your reader what to expect if they click through? The text you use can make a big difference.

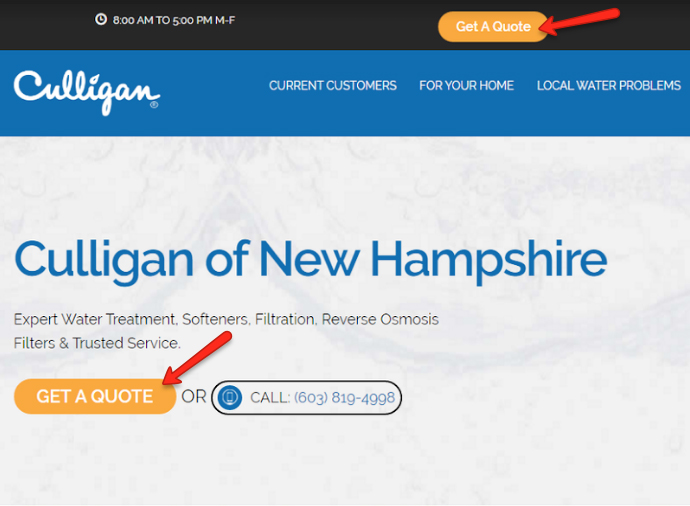

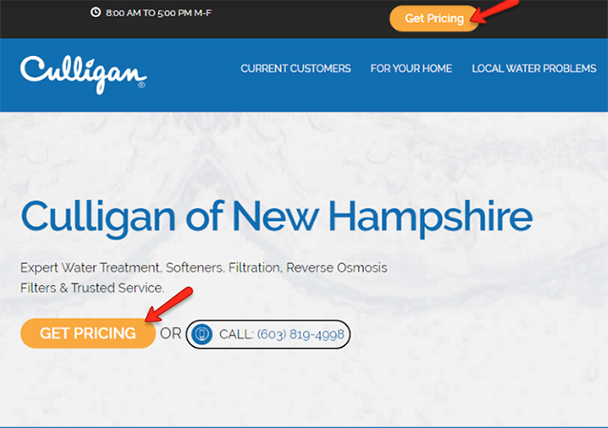

Behave.org shared an example of this where a B2C company in the United States wanted to test whether the words “get a quote” would perform better than “get pricing” as their call-to-action. The team’s hypothesis was that “get a quote” would win, as it focuses more on the customer service aspect rather than just the cost and would therefore establish more trust. Can you guess which test won?

As the team suspected, Version A (“get a quote”) won, leading to a 104% increase in form submissions. Most recipients responded better to a call-to-action that suggested a tailored, service-focused approach rather than an impersonal price list.

When it comes to calls to action, consumers don’t like surprises. They want to know exactly what they’re getting when they click that button. With the right message at the right time, you show that you can offer genuine assistance and solve their problem.

Subject lines

The same goes for subject lines. It is important to spark the reader’s interest while being clear about what to expect from your email.

Optimizely, a B2B software company, shared its own A/B test on subject lines for an email offering an ebook for download. The team’s hypothesis was that readers would be more likely to click the “download ebook” call-to-action button if they knew to expect an ebook before they opened the email.

They were right. The subject line, “[Ebook] Why You’re Crazy to Spend on SEM But Not A/B Testing” resulted in 30% more button clicks than the same subject line without “[Ebook]”.

We recently ran our own subject line test for our On Trend newsletter. Our hypothesis was that a subject line summarising the contents of the email would generate higher open and click-through rates than one simply stating the newsletter’s title, On Trend. The results showed a 5% increase in open rates and a 50% increase in click-through rates.
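When you record results like these, it helps to be explicit about what a percentage increase means. A relative lift compares the variant’s rate with the control’s rate, while an absolute difference is measured in percentage points, and the two can look very different. The sketch below uses hypothetical figures (not the results reported above) and hypothetical helper names to show both calculations.

```python
def rate(events, delivered):
    """Share of delivered emails that produced an event, such as an open or a click."""
    return events / delivered


def relative_lift(control_rate, variant_rate):
    """Change of the variant relative to the control, as a percentage."""
    return (variant_rate - control_rate) / control_rate * 100


def absolute_difference(control_rate, variant_rate):
    """Change of the variant over the control, in percentage points."""
    return (variant_rate - control_rate) * 100


if __name__ == "__main__":
    # Hypothetical figures for illustration only.
    control = rate(400, 2000)  # 400 opens from 2,000 delivered = 20% open rate
    variant = rate(420, 2000)  # 420 opens from 2,000 delivered = 21% open rate
    print(f"Relative lift: {relative_lift(control, variant):.1f}%")
    print(f"Absolute difference: {absolute_difference(control, variant):.1f} percentage points")
```

With these made-up figures, an open rate that moves from 20% to 21% is a 5.0% relative lift but only a 1.0 percentage point absolute difference. Read as a relative lift, a 104% increase means the winning version produced just over twice as many conversions as the other.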

Some businesses find value in keeping their subject lines consistent so recipients recognise them in their inboxes each time. Others prefer to change them depending on the content. Test both approaches over time so you end up with the result that works best for your particular audience.

Content

The format of your email content can make a big difference to engagement rates. It’s easy to make assumptions about whether your audience prefers videos, webinars, ebooks, blogs or infographics, but until you test them you won’t know for sure.

Try publishing content in two different formats in an A/B test to see which performs best in your email. For example, if you are conducting an interview with an industry expert, record it as a video, also publish it as a written blog post, and see which format wins the A/B test. You could also test different topics, such as tips and tricks, interviews, thought leadership articles, fun quizzes or company culture pieces.

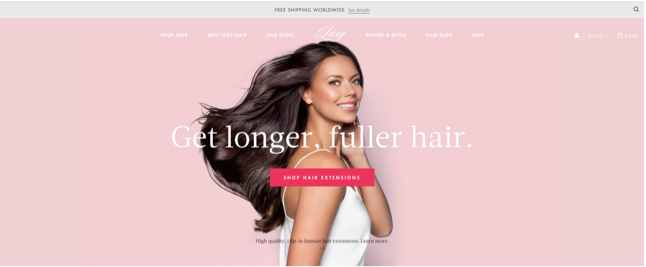

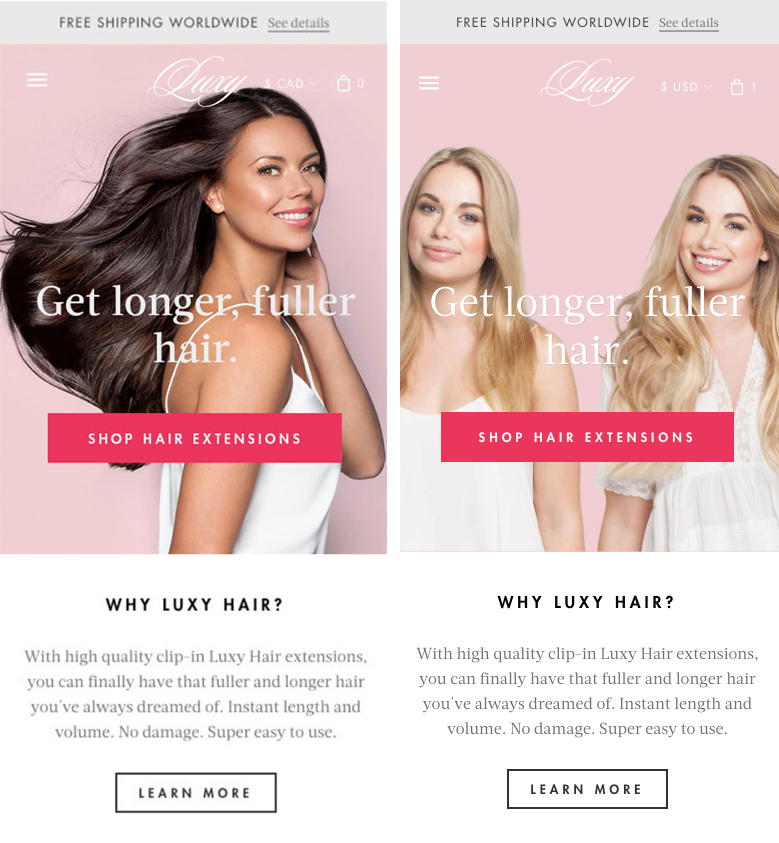

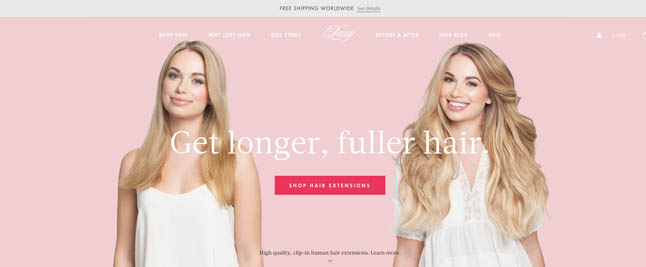

It’s also worth considering the impact of device on content engagement. Behave.org published an A/B test for a hair care brand that compared two different hero images on desktop and mobile. The hypothesis was that while the ‘before and after’ hero image may help shoppers see the product in action, it may be too cluttered on mobile screens and deter shoppers from clicking through.

The results confirmed the team’s hypothesis. The ‘before and after’ image increased the click-through rate (CTR) by almost 8% on desktop but decreased it by almost 1% on mobile. That may not sound like much, but the decrease in mobile CTR was reported to mean a 27.69% drop in overall revenue for the business.

To perform a test like this in the Vision6 platform, send two different hero images to Group A and Group B, then check your report to see how recipients’ devices affect click-through rates. Dynamic content that changes by device is possible, but it requires coding skills. An easier solution is to choose content that works across multiple devices rather than content suited to only one.
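A report broken down by device makes it easier to spot cases like the one above, where a version wins on one device and loses on another. This Python sketch assumes you can export opens and clicks per version and per device; because an email’s device is typically only detected when it is opened or clicked, it calculates a click-to-open rate rather than a rate over all delivered emails. The function name and the sample figures are hypothetical.

```python
from collections import defaultdict


def click_to_open_by_device(rows):
    """Aggregate (variant, device, opens, clicks) rows into click-to-open rates (%)."""
    opens = defaultdict(int)
    clicks = defaultdict(int)
    for variant, device, row_opens, row_clicks in rows:
        key = (variant, device)
        opens[key] += row_opens
        clicks[key] += row_clicks
    # Skip any segment with no opens to avoid dividing by zero.
    return {key: clicks[key] / opens[key] * 100 for key in opens if opens[key] > 0}


if __name__ == "__main__":
    # Hypothetical report rows: (variant, device, opens, clicks).
    rows = [
        ("A", "desktop", 500, 60),
        ("A", "mobile", 700, 70),
        ("B", "desktop", 480, 48),
        ("B", "mobile", 720, 86),
    ]
    for (variant, device), cto in sorted(click_to_open_by_device(rows).items()):
        print(f"Version {variant} on {device}: {cto:.1f}% click-to-open")
```

In this made-up data, Version A performs better on desktop while Version B performs better on mobile, even though the combined totals for the two versions are close.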

Personalisation

The great thing about email is its ability to reach your audience on a one-to-one level. With personalisation, you can nurture that connection and incite action from your readers.

Try adding personalisation to your subject line in an A/B test to see if it improves open rates. To do this, check out how to use wildcards in the Vision6 platform.

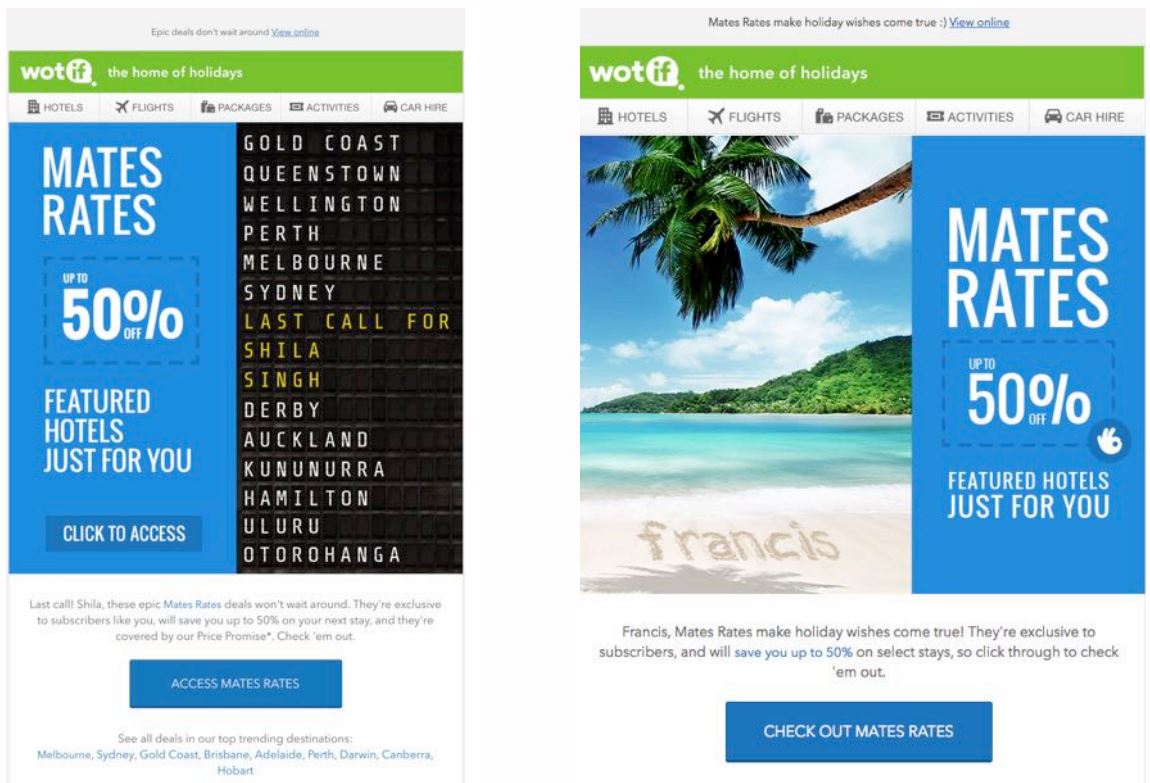

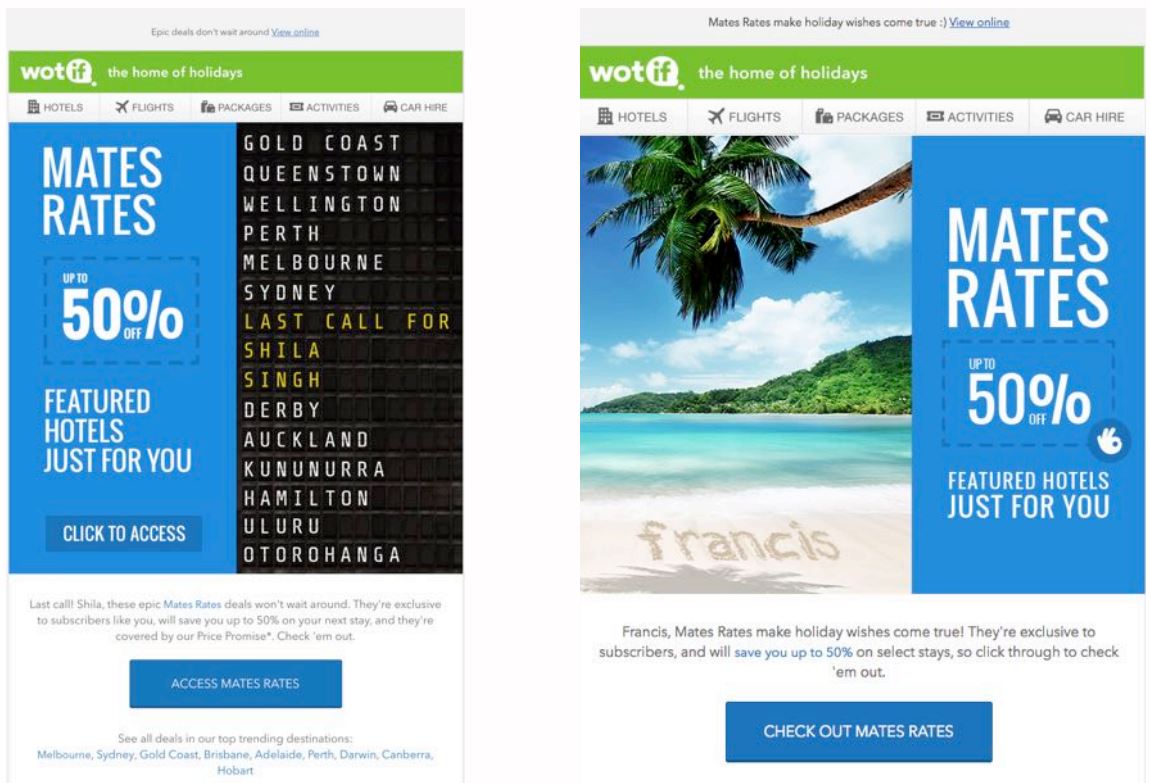

Use conditions to test dynamic content based on CRM information. It could be gender, age, location, interests, purchase history, or any other segment that you’ve developed. Wotif Senior Digital Marketer, Adrian Westwood, shared a great case study on dynamic content at EMSA two weeks ago. The goal was to surprise and delight the Wotif audience and increase email engagement with a personalised email. The email campaign promoted “mates rates” on hotels and the test compared an airport flight board design with a personalised photograph of a tropical beach with the recipient’s name written in the sand.

Adrian proudly informed the EMSA audience that the personalised email boosted engagement significantly. He explained that there are two key factors that drive the desire for personalised experiences: the desire for control and the attraction to content that cuts through the masses of information we are presented with on a daily basis. If you can connect with your audience on a personal level, you have more of an opportunity to build a better relationship long-term.

Time of day / week

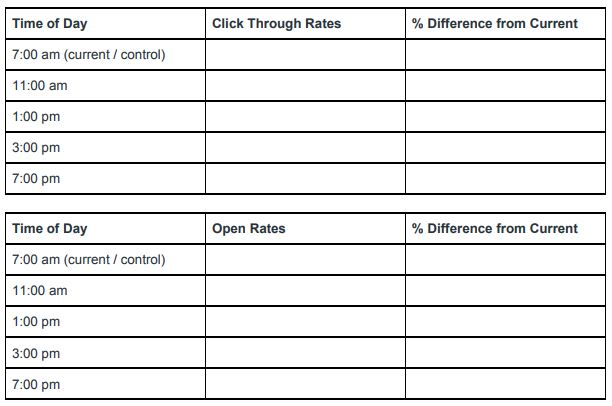

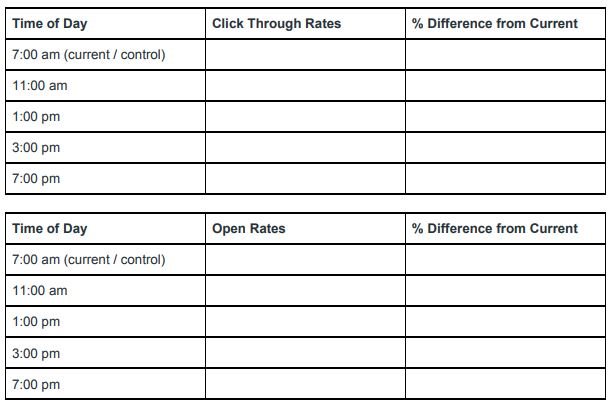

The best time to send email newsletters should definitely be tested, as it is different for every business. Keep a table of results over time so you can track which send times yield the best open rates or click-through rates.

You can also test different days of the week, including weekends, to see how they affect your email’s performance. There are quite a few combinations of day and time, but over time you will learn when your audience prefers to receive communication from you.
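If you keep your results table in a spreadsheet or export, a few lines of code can average repeated sends and pick out the strongest slot. The Python sketch below assumes each row records a day, an hour and the open and click-through rates for one send; the field names, the `best_send_slot` function and the sample figures are all hypothetical.

```python
from collections import defaultdict
from statistics import mean


def best_send_slot(results, metric="open_rate"):
    """Return the (day, hour) slot with the highest average value of `metric`."""
    by_slot = defaultdict(list)
    for row in results:
        by_slot[(row["day"], row["hour"])].append(row[metric])
    # Average repeated sends in the same slot so one lucky send doesn't dominate.
    averages = {slot: mean(values) for slot, values in by_slot.items()}
    best = max(averages, key=averages.get)
    return best, averages[best]


if __name__ == "__main__":
    # Hypothetical results table: one row per send, rates in percent.
    results = [
        {"day": "Tue", "hour": 9, "open_rate": 22.0, "click_rate": 3.1},
        {"day": "Tue", "hour": 9, "open_rate": 24.0, "click_rate": 3.5},
        {"day": "Thu", "hour": 14, "open_rate": 25.0, "click_rate": 2.8},
        {"day": "Sat", "hour": 10, "open_rate": 18.0, "click_rate": 2.2},
    ]
    for metric in ("open_rate", "click_rate"):
        (day, hour), value = best_send_slot(results, metric)
        print(f"Best slot for {metric}: {day} {hour}:00 ({value:.1f}%)")
```

With this sample data the best slot for opens is not the best slot for clicks, which is why it is worth deciding up front which metric you are optimising for.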

Getting the frequency of your emails right is another great thing to test. Does weekly, fortnightly or monthly work best for your audience? Remember to keep one group as a control, receiving your newsletter as per usual so you can compare the difference in results.

Conclusion

A/B testing is a great way to make sure you’re communicating with your audience in the most effective way. Some would argue that it has limitations, such as focusing too much on the majority even though a potentially large group of people may still favour the “losing” option. However, most marketers would agree that it is important to test, ask questions and optimise wherever possible, and A/B testing is a great way to introduce this way of thinking into your marketing practice.

Keep an eye out for the upcoming A/B testing feature release in your Vision6 account!